Developing an Object Detection App for the Blind

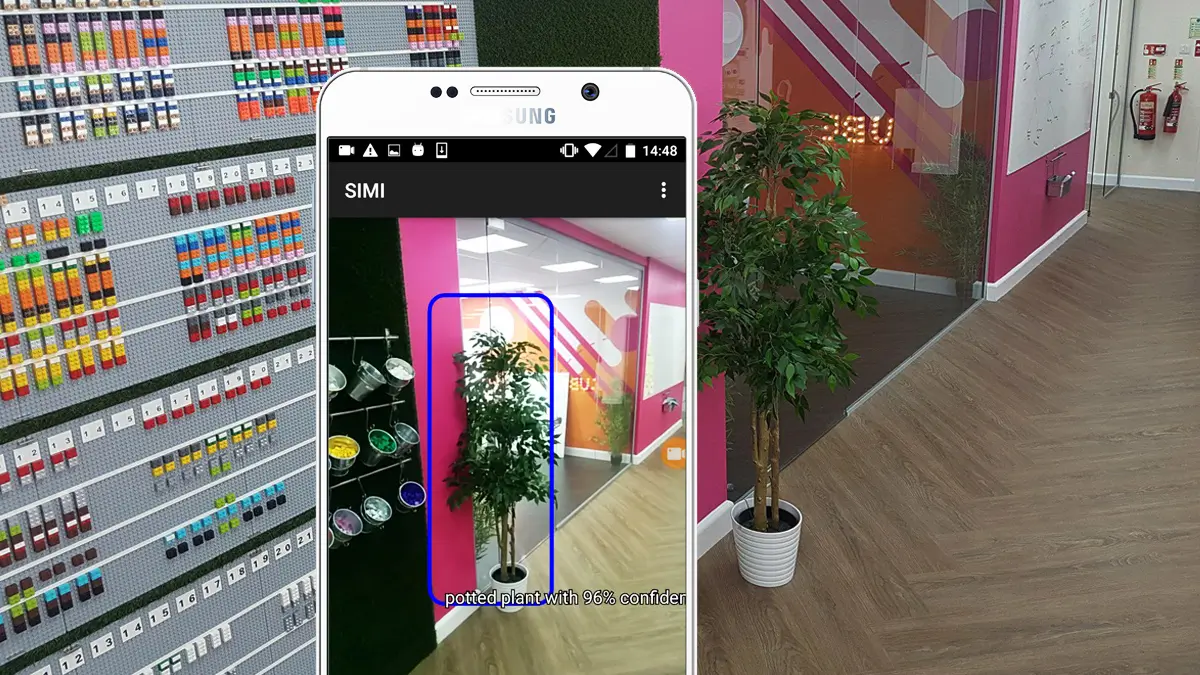

SIMI is an object recognition app for the visually impaired. Our Android Developer Nikos brought this 'Tech For Good' project to life to aid those with sight loss or visual impairments. After a few improvements and shiny new features, the app can now recognise who and what is in front of you, answer your questions, scan barcodes and call or send messages to emergency contacts.

Being inspired to help those living with visual impairments.

As a developer working in an agency that collaborates with charities all over the world, it’s inevitable that at some point I’d be influenced by these amazing organisations.

Seeing first-hand the impact that these charities are having has made me want to grow not only as a developer but, even more so, as a person.

The last time I was inspired to be a better person?

Working on a project with Vista. It has been one of my favourite projects to date: Vista is an incredible charity with an incredible cause, one that champions Tech For Good and has a genuine passion for improving the lives of its community.

In Vista’s own words: “Vista is one of the oldest and largest local charities in Leicester... We’ve been working with people with sight loss and their families for over 150 years.”

One of Vista’s aims, ‘Provide support and care for people with sight loss’, struck me in particular as something I could help with during my week of Innovation Time.

Having previously built a vision screening app, I wanted to build something that would make daily life a whole lot easier for people with visual impairments.

Making an app that mimics human eyes: What can SIMI do?

SIMI (pronounced See-Mee) aspires to be a tool that makes the daily lives of those living with visual impairments a little easier.

Here are the elements that allow him to do so…

'It's an emergency!'

Arguably the most important feature is SIMI’s ability to help in times of crisis. If someone who is visually impaired has an accident or emergency (such as getting hurt, lost or overwhelmed), they can ask SIMI to make a call for them.

The ‘First Contact’ feature allows you to add emergency contacts in case of (you guessed it) an emergency. This means that you can quickly call or notify these people when time is of the essence by telling SIMI to ‘Call Dad’ (for example).
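The post doesn’t say how SIMI turns a phrase like ‘Call Dad’ into a call. As a minimal sketch, assuming the speech recogniser has already produced a plain-text transcript, the lookup could be as simple as the Java below. `FirstContacts` and `numberToCall` are hypothetical names for illustration, not SIMI’s actual code.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

// Hypothetical 'First Contacts' store: spoken name -> phone number.
final class FirstContacts {
    private static final String CALL_PREFIX = "call ";
    private final Map<String, String> numbersByName = new HashMap<>();

    // Names are stored lower-case so "Dad", "dad" and "DAD" all match.
    void add(String name, String phoneNumber) {
        numbersByName.put(normalise(name), phoneNumber);
    }

    // Returns the number for a transcript such as "Call Dad", or empty if the
    // phrase is not a call command or names someone who isn't a First Contact.
    Optional<String> numberToCall(String transcript) {
        String text = normalise(transcript);
        if (!text.startsWith(CALL_PREFIX)) {
            return Optional.empty();
        }
        String name = text.substring(CALL_PREFIX.length()).trim();
        return Optional.ofNullable(numbersByName.get(name));
    }

    private static String normalise(String s) {
        return s.trim().toLowerCase(Locale.ROOT);
    }
}
```

On a device, the returned number could then be passed to an `Intent.ACTION_CALL` intent with a `tel:` URI, which requires the `CALL_PHONE` permission. Matching on an exact ‘call’ prefix keeps the sketch simple; a real assistant would probably need to tolerate variations such as ‘ring Dad’ or ‘phone my dad’.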

'What's in front of me?'

SIMI’s object recognition means that he can not only recognise what is in front of you but can even recognise a familiar face. SIMI could say “Hey James”, for example, and you’d know exactly who was approaching you.

The ‘What I see’ feature allows SIMI users to send a picture of what the app is seeing to their ‘First Contacts’. This can help users get a second opinion on the app’s recognition or simply share a picture of something nice with their loved ones.

This could even go as far as warning you when you’re about to step out onto a busy road, or letting you know when it’s safe to cross.

'What's it like outside?'

Ask SIMI questions like “What’s the weather like?” and its API will collect data on your query and respond with the answer.

With GPS features included, SIMI can also tell you where you are and guide you to your desired destination (such as your bathroom or the local shop).
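How SIMI performs its guidance isn’t described. One common building block for outdoor navigation is the great-circle distance and initial bearing between the user’s GPS fix and the destination. The sketch below uses the haversine formula; it is an illustrative assumption rather than SIMI’s implementation, and `Geo` is a hypothetical class name.

```java
// Hypothetical helper: distance and direction between two GPS fixes.
final class Geo {
    private static final double EARTH_RADIUS_METRES = 6_371_000.0; // mean Earth radius

    // Great-circle distance in metres (haversine formula).
    static double distanceMetres(double lat1, double lon1, double lat2, double lon2) {
        double phi1 = Math.toRadians(lat1);
        double phi2 = Math.toRadians(lat2);
        double dPhi = Math.toRadians(lat2 - lat1);
        double dLambda = Math.toRadians(lon2 - lon1);
        double a = Math.sin(dPhi / 2) * Math.sin(dPhi / 2)
                + Math.cos(phi1) * Math.cos(phi2) * Math.sin(dLambda / 2) * Math.sin(dLambda / 2);
        double c = 2 * Math.atan2(Math.sqrt(a), Math.sqrt(1 - a));
        return EARTH_RADIUS_METRES * c;
    }

    // Initial bearing in degrees clockwise from north, in the range [0, 360).
    static double initialBearingDegrees(double lat1, double lon1, double lat2, double lon2) {
        double phi1 = Math.toRadians(lat1);
        double phi2 = Math.toRadians(lat2);
        double dLambda = Math.toRadians(lon2 - lon1);
        double y = Math.sin(dLambda) * Math.cos(phi2);
        double x = Math.cos(phi1) * Math.sin(phi2)
                - Math.sin(phi1) * Math.cos(phi2) * Math.cos(dLambda);
        double theta = Math.toDegrees(Math.atan2(y, x));
        return (theta + 360.0) % 360.0;
    }
}
```

On Android itself, `Location.distanceBetween` and `Location.bearingTo` offer equivalent calculations, so hand-rolled maths like this is mainly useful for understanding or unit testing the guidance logic.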

'Once upon a time...'

Another cool feature is that SIMI can scan the barcodes of items it recognises, such as books, then search the internet to find the product and offer places to buy it.
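The post doesn’t name the barcode scanning library. Whatever does the decoding, a cheap sanity check before searching online is the EAN-13 check digit, the same scheme book barcodes (ISBN-13) use. The helper below is a hypothetical sketch, not part of SIMI.

```java
// Hypothetical helper: validates an EAN-13 barcode, the format used for ISBN-13.
final class Ean13 {
    static boolean isValid(String code) {
        if (code == null || !code.matches("\\d{13}")) {
            return false;
        }
        int sum = 0;
        for (int i = 0; i < 12; i++) {
            int digit = code.charAt(i) - '0';
            // Weights alternate 1, 3, 1, 3, ... starting from the left-most digit.
            sum += (i % 2 == 0) ? digit : digit * 3;
        }
        int expectedCheck = (10 - sum % 10) % 10;
        return expectedCheck == code.charAt(12) - '0';
    }

    // Books carry the "Bookland" prefixes 978 or 979.
    static boolean isBook(String code) {
        return isValid(code) && (code.startsWith("978") || code.startsWith("979"));
    }
}
```

A failed check usually means a misread, so rescanning is cheaper than running a web search on a wrong number.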

Taking this forward, I want SIMI to be able to actually read the books too: another way of improving the lives of those with visual impairments.

How I built the object recognition app.

Since I wanted to help as many people as possible within this community, it was crucial not to let device limitations, such as storage space, RAM, camera quality and Android version, restrict who could use the app…

The app was designed to work on Android Lollipop (API level 21) and, with a little modification, could also work on Android KitKat (API level 19). At the time, this translated to support for 80.7% and 93.6% of Android users respectively.

TensorFlow: A computation library.

If you’re familiar with object detection, then you have undoubtedly heard of TensorFlow (a graph computation library), which is widely used by developers to construct deep neural networks.

OpenCV: A machine learning library.

The other library you’re likely to come across is OpenCV (an open-source computer vision and machine learning library).

These two libraries are well suited to object detection, and another benefit is that they can be used separately or together.

In my case?

Bearing in mind that I have limited experience in object detection, I found TensorFlow the easiest to configure, plus we found pre-trained object detection models for it.
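TensorFlow’s pre-trained object detection models typically report, for each detection, a class (mapped to a label such as ‘person’), a confidence score and a bounding box. The post doesn’t show how SIMI turns these into speech; as a sketch under that assumption, the helper below drops low-confidence results and describes each remaining object’s rough position. `Detection` and `DetectionSummariser` are hypothetical, and the left/right mapping assumes an upright image from a rear camera facing the same way as the user.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical container for one result from a pre-trained detection model.
final class Detection {
    final String label;                    // e.g. "person", after mapping the class id to a name
    final float score;                     // model confidence, 0..1
    final float left, top, right, bottom;  // bounding box, normalised to 0..1

    Detection(String label, float score, float left, float top, float right, float bottom) {
        this.label = label;
        this.score = score;
        this.left = left;
        this.top = top;
        this.right = right;
        this.bottom = bottom;
    }
}

final class DetectionSummariser {
    // Builds a short sentence for text-to-speech from detections scoring at least minScore.
    static String describe(List<Detection> detections, float minScore) {
        List<Detection> kept = new ArrayList<>();
        for (Detection d : detections) {
            if (d.score >= minScore) {
                kept.add(d);
            }
        }
        if (kept.isEmpty()) {
            return "I can't see anything I recognise.";
        }
        // Most confident first, so the likeliest objects are announced first.
        kept.sort((a, b) -> Float.compare(b.score, a.score));
        List<String> parts = new ArrayList<>();
        for (Detection d : kept) {
            parts.add(d.label + " " + position(d));
        }
        return "I can see: " + String.join(", ", parts) + ".";
    }

    // Splits the frame into thirds by the horizontal centre of the box.
    private static String position(Detection d) {
        float centreX = (d.left + d.right) / 2f;
        if (centreX < 1f / 3f) {
            return "on your left";
        } else if (centreX > 2f / 3f) {
            return "on your right";
        }
        return "ahead";
    }
}
```

The threshold is a tuning choice: set it too low and the app announces objects that aren’t there, too high and it stays silent about real ones.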

Integrating speech recognition.

My first attempt used the default Android speech recogniser, but I found that it doesn’t support continuous speech recognition and, on top of this, it requires internet access (unless targeting Android Marshmallow or newer).

Moreover, the recogniser is not really configurable: you can’t set your own keyword, such as ‘Hello Google’.

DroidSpeech: An open-source Android library.

Unlike the default recogniser, DroidSpeech supports continuous speech detection (plus it’s open-source software), but it also requires internet access and does not support keyword searches.

PocketSphinx: A speech recognition engine.

PocketSphinx is a lightweight speech recognition engine, specifically tuned for handheld and mobile devices (although it’s just as effective on desktop). The library supports continuous speech detection and doesn’t require internet access.

But the best thing about the library is that it supports keyword and phrase searches.

SpeechRecogniser: A speech recognition library.

In the app’s first version, SIMI only supported the PocketSphinx library, which is good at detecting keywords but not great at understanding full sentences.

In the end, I implemented SpeechRecogniser, a library that I’d actually rejected in the early stages of development. Now, the app can switch between the two libraries to make sense of what you’re saying.
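The post doesn’t describe how the switch between the two libraries works. One plausible arrangement, assumed here, is a small state machine: a keyword engine (such as a PocketSphinx keyphrase search) listens for a wake phrase, then hands the microphone to a full-sentence recogniser and takes it back once the sentence is done. Stopping one engine before starting the other matters because two recognisers typically can’t share the microphone. `SpeechEngine` and `RecognitionSwitcher` are hypothetical names, not either library’s API.

```java
// Hypothetical wrapper over any speech engine: keyword spotter or full-sentence recogniser.
interface SpeechEngine {
    void start();
    void stop();
}

final class RecognitionSwitcher {
    enum Mode { KEYWORD, SENTENCE }

    private final SpeechEngine keywordEngine;
    private final SpeechEngine sentenceEngine;
    private Mode mode = Mode.KEYWORD;

    RecognitionSwitcher(SpeechEngine keywordEngine, SpeechEngine sentenceEngine) {
        this.keywordEngine = keywordEngine;
        this.sentenceEngine = sentenceEngine;
    }

    // Start in keyword-spotting mode, listening only for the wake phrase.
    void begin() {
        mode = Mode.KEYWORD;
        keywordEngine.start();
    }

    // Wake phrase heard: release the microphone and hand over to the sentence engine.
    void onKeywordDetected() {
        if (mode != Mode.KEYWORD) {
            return; // ignore duplicate callbacks while a sentence is being captured
        }
        keywordEngine.stop();
        sentenceEngine.start();
        mode = Mode.SENTENCE;
    }

    // Sentence finished (or timed out): go back to cheap keyword spotting.
    void onSentenceFinished() {
        if (mode != Mode.SENTENCE) {
            return;
        }
        sentenceEngine.stop();
        keywordEngine.start();
        mode = Mode.KEYWORD;
    }

    Mode mode() {
        return mode;
    }
}
```

Keeping the lightweight keyword engine as the default state means the heavier full-sentence recogniser only runs for the few seconds after the wake phrase.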

The future for SIMI?

One thing that has become apparent since starting my Innovation Time is that I have made far too many assumptions. As a prototype, SIMI worked well in recognising speech, detecting objects and interacting with the user.

However, the problems SIMI solves are fairly obvious, simple ones. To take the project forward, the first thing I’d want to do is sit down with members of this community and ask them about the problems they face…

So SIMI can become the answer!

Published on April 20, 2018, last updated on March 15, 2023